In big data scenarios, raw and unorganized data is often stored in various relational and nonrelational storage systems. Without proper context or meaning, this raw data can’t provide valuable insights to analysts, data scientists, or decision-makers.

To transform raw data into actionable business insights, you need to orchestrate and operationalize complex processes. Big data processing requires the coordination and management of data processing tasks. The data must be transformed, cleaned, and stored in a way that allows for easy consumption by downstream applications and services.

Azure Data Factory enables you to move and transform data from various sources to various destinations. It’s a managed cloud service designed specifically for handling complex hybrid extract-transform-load (ETL), extract-load-transform (ELT), and data integration projects. This article describes how you can use Azure Data Factory to create, schedule, and manage data pipelines.

Usage scenarios

Imagine a gaming company that accumulates petabytes of game logs from cloud-based games. The company wants to analyze these logs to understand customer preferences, demographics, and usage behavior. From this analysis, the company hopes to identify opportunities for up-selling and cross-selling, develop new features, drive business growth, and enhance the customer experience.

To analyze these logs, the company needs to use reference data, such as customer information, game details, and marketing campaign data, that is stored in an on-premises data store. The company wants to combine this reference data with log data from a cloud data store.

The company plans to process the combined data using a Spark cluster in the cloud (Azure HDInsight) and to publish the transformed data to a cloud data warehouse like Azure Synapse Analytics for easy reporting. It wants to automate this workflow, run it both on a daily schedule and whenever files arrive in a blob store container, and monitor and manage it over time.

Azure Data Factory addresses these data scenarios as a cloud-based ETL and data integration service that enables the creation of data-driven workflows for orchestrating data movement and transformation at scale. The data-driven workflows are called pipelines. With Azure Data Factory, the gaming company develops and schedules pipelines to ingest data from various data stores. It creates complex ETL processes that transform data using data flows or compute services like Azure HDInsight Hadoop, Azure Databricks, and Azure SQL Database. The company publishes transformed data to data stores like Azure Synapse Analytics, where it’s available to business intelligence (BI) applications.

Azure Data Factory helps the gaming company make better business decisions by organizing raw data into meaningful data stores and data lakes.

How does it work?

Data Factory contains a series of interconnected systems that provide a complete end-to-end platform for data engineers.

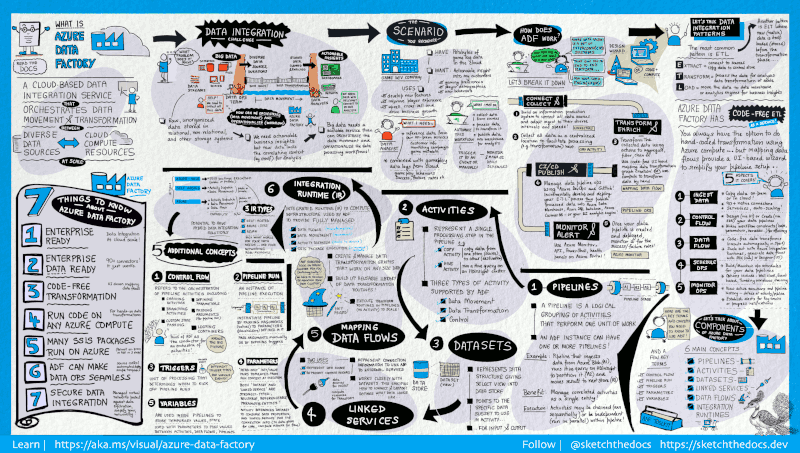

The preceding visual guide provides a detailed overview of the complete Data Factory architecture.

The following sections provide an overview of the end-to-end system.

Connect and Collect

Enterprises manage various types of data from different sources. The types of data can include structured, unstructured, and semi-structured. The sources may reside on-premises or in the cloud. The data might arrive at different intervals and velocities.

The first step in building an information production system is to connect to all required data and processing sources. The sources can include SaaS services, databases, file shares, and FTP web services. The next step is to move the data as needed to a centralized location for further processing.

Without Data Factory, you have to build custom data movement components or write custom services to integrate these data sources and processing. Custom solutions can be expensive, and difficult to integrate and maintain. They often lack the enterprise-grade monitoring, alerting, and controls that a fully managed service can offer.

With Data Factory, you use the Copy Activity in a data pipeline to move data from both on-premises and cloud source data stores to a centralized data store in the cloud. For example, you can collect data in Azure Data Lake Storage and later transform the data using an Azure Data Lake Analytics compute service. Or, you can collect data in Azure Blob storage and transform it later using an Azure HDInsight Hadoop cluster.

Transform and Enrich

After you’ve centralized the data, you can use mapping data flows to process or transform the collected data. Data flows enable data engineers to build and maintain data transformation graphs that execute on Spark without needing to understand Spark clusters or Spark programming.

If you prefer to manually create transformations, Data Factory supports external activities for executing your transformations on compute services such as HDInsight Hadoop, Spark, Data Lake Analytics, and Machine Learning.

CI/CD and Publish

Data Factory offers full support for CI/CD of your data pipelines using Azure DevOps and GitHub. You can incrementally develop and deliver your ETL processes before publishing the finished product. After you’ve refined the raw data into a consumable form, load the data into Azure Synapse Analytics, Azure SQL Database, Azure Cosmos DB, or any other analytics engine. Your business users can access this data from their business intelligence tools.

Monitor

After successfully building and deploying your data integration pipeline, monitor the scheduled activities and pipelines for success and failure rates. Azure Data Factory has built-in support for pipeline monitoring via Azure Monitor, API, PowerShell, Azure Monitor logs, and health panels on the Azure portal.
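As a rough illustration of what "success and failure rates" means in practice, the following Python sketch computes both rates from a list of run records. The record shape, the pipeline name, and the `outcome_rates` helper are hypothetical; real run data would come from one of the monitoring channels listed above, which this sketch doesn't call.

```python
from collections import Counter

# Hypothetical run records; in practice these would come from a monitoring source.
runs = [
    {"pipelineName": "ProcessGameLogs", "status": "Succeeded"},
    {"pipelineName": "ProcessGameLogs", "status": "Succeeded"},
    {"pipelineName": "ProcessGameLogs", "status": "Failed"},
    {"pipelineName": "ProcessGameLogs", "status": "Succeeded"},
    {"pipelineName": "ProcessGameLogs", "status": "InProgress"},
]


def outcome_rates(run_records):
    """Return the share of finished runs that succeeded and failed."""
    finished = [r["status"] for r in run_records if r["status"] in ("Succeeded", "Failed")]
    if not finished:
        return {"Succeeded": 0.0, "Failed": 0.0}
    counts = Counter(finished)
    return {status: counts[status] / len(finished) for status in ("Succeeded", "Failed")}


rates = outcome_rates(runs)
print(rates)
```

Runs that are still in progress are left out of the denominator here, which is one possible design choice; a dashboard might count them separately instead.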

Top-level concepts

An Azure subscription might have one or more Azure Data Factory instances (or data factories). The key components of Azure Data Factory are:

- Pipelines

- Activities

- Datasets

- Linked services

- Mapping data flows

- Integration runtimes

- Triggers

- Control flows

Each of these components plays a role in building and executing data-driven workflows. They’re described in greater detail in the following sections.

Pipelines

Pipelines are logical groupings of activities that perform a unit of work. A pipeline allows you to manage activities as a set and chain them together to achieve specific data processing goals. For example, a pipeline can contain a group of activities that ingest data from an Azure blob and then run a Hive query on an HDInsight cluster to partition the data.

A pipeline run is an instance of the pipeline execution. It’s instantiated by passing arguments to parameters defined in pipelines. The arguments for a pipeline run can be passed manually or within a trigger definition. For more information, see Pipeline execution and triggers in Azure Data Factory or Azure Synapse Analytics.

Parameters are key-value pairs of read-only configuration. They’re defined in the pipeline and passed during execution from the run context.

Variables store temporary values inside pipelines. They can be used with parameters to pass values between pipelines, data flows, and activities.
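To make the relationship between parameters, arguments, and a pipeline run more concrete, the following Python sketch builds a pipeline definition as a plain dictionary, in the general shape of a Data Factory pipeline JSON document, and binds arguments to its parameters locally. The pipeline name, parameter names, and the `resolve_run_arguments` helper are hypothetical. The helper only mimics the idea of passing arguments to parameters and isn't part of any Data Factory SDK.

```python
import json

# Hypothetical pipeline definition, shaped like a Data Factory pipeline JSON document.
pipeline = {
    "name": "ProcessGameLogs",
    "properties": {
        "parameters": {
            "inputFolder": {"type": "String"},
            "windowDays": {"type": "Int", "defaultValue": 1},
        },
        "variables": {
            "rowCount": {"type": "String", "defaultValue": "0"},
        },
        "activities": [],
    },
}


def resolve_run_arguments(definition, arguments):
    """Bind run arguments to the declared parameters (local illustration only)."""
    declared = definition["properties"]["parameters"]
    unknown = set(arguments) - set(declared)
    if unknown:
        raise ValueError(f"Unknown parameters: {sorted(unknown)}")
    resolved = {}
    for name, spec in declared.items():
        if name in arguments:
            resolved[name] = arguments[name]
        elif "defaultValue" in spec:
            resolved[name] = spec["defaultValue"]
        else:
            raise ValueError(f"Missing argument for parameter: {name}")
    return resolved


run_arguments = resolve_run_arguments(pipeline, {"inputFolder": "logs/2024-01-01"})
print(json.dumps(run_arguments, indent=2))
```

In this sketch, `windowDays` falls back to its default because the caller supplies only `inputFolder`, and the resolved values are fixed for the duration of the run.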

Activities

Activities are processing steps in a pipeline. There are three types of activities in Azure Data Factory:

- Data movement activities: move data between sources and destinations

- Data transformation activities: transform data using various compute services

- Control activities: orchestrate and control the flow of activities in a pipeline

For example, you might use a copy activity to copy data from one data store to another data store. Or, you might use a Hive activity, which runs a Hive query on an Azure HDInsight cluster, to transform or analyze your data.
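The copy and Hive examples above can be sketched as activity definitions. The following Python snippet writes both as dictionaries in the general shape of Data Factory activity JSON and sorts them into the three activity types. All names, dataset references, and the script path are hypothetical, and the `ACTIVITY_CATEGORIES` table is a small illustrative subset rather than a complete list of activity types.

```python
# Hypothetical activity definitions in the general shape of Data Factory activity JSON.
copy_logs = {
    "name": "CopyGameLogs",
    "type": "Copy",
    "inputs": [{"referenceName": "RawLogsDataset", "type": "DatasetReference"}],
    "outputs": [{"referenceName": "StagedLogsDataset", "type": "DatasetReference"}],
    "typeProperties": {
        "source": {"type": "BlobSource"},
        "sink": {"type": "BlobSink"},
    },
}

partition_logs = {
    "name": "PartitionLogsWithHive",
    "type": "HDInsightHive",
    "linkedServiceName": {"referenceName": "HDInsightCluster", "type": "LinkedServiceReference"},
    "typeProperties": {
        "scriptPath": "scripts/partition_logs.hql",
        "scriptLinkedService": {"referenceName": "ScriptStorage", "type": "LinkedServiceReference"},
    },
}

# Illustrative subset only; many more activity types exist.
ACTIVITY_CATEGORIES = {
    "Copy": "data movement",
    "HDInsightHive": "data transformation",
    "DatabricksNotebook": "data transformation",
    "ForEach": "control",
    "IfCondition": "control",
}


def categorize(activity):
    """Map an activity's type to one of the three activity categories."""
    return ACTIVITY_CATEGORIES.get(activity["type"], "unknown")


for activity in (copy_logs, partition_logs):
    print(f"{activity['name']}: {categorize(activity)}")
```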

Datasets

Datasets represent data structures within data stores. They point to or reference data used in activities as inputs or outputs.

A dataset is a strongly typed parameter. An activity can reference datasets and consume the properties that are defined in the dataset definition.

Linked services

A linked service is a strongly typed parameter that defines the connection information that Data Factory needs to connect to external resources. Linked services represent the data stores and compute resources that are used in the execution of activities.

For example, an Azure Storage linked service specifies a connection string to connect to the Azure Storage account. An Azure blob dataset then specifies the blob container and the folder that contains the data.

Linked services are used for two purposes in Data Factory:

- To represent a data store that includes, but isn’t limited to, a SQL Server database, Oracle database, file share, or Azure blob storage account. For a list of supported data stores, see the copy activity article.

- To represent a compute resource that can host the execution of an activity. For example, the HDInsightHive activity runs on an HDInsight Hadoop cluster. For a list of transformation activities and supported compute environments, see the transform data article.
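The split between a linked service (how to connect) and a dataset (which data to use) can be sketched as follows. The Python dictionaries below follow the general shape of Data Factory JSON definitions, but the names, the folder path, and the `find_linked_service` helper are hypothetical, and the connection string is a placeholder rather than a real value.

```python
# Hypothetical linked service: holds the connection information for a storage account.
linked_service = {
    "name": "GameLogsStorage",
    "properties": {
        "type": "AzureBlobStorage",
        "typeProperties": {
            # Placeholder only; real secrets belong in a secret store, not in code.
            "connectionString": "<storage-connection-string>",
        },
    },
}

# Hypothetical dataset: points at a container and folder through the linked service.
dataset = {
    "name": "RawLogsDataset",
    "properties": {
        "type": "AzureBlob",
        "linkedServiceName": {
            "referenceName": "GameLogsStorage",
            "type": "LinkedServiceReference",
        },
        "typeProperties": {"folderPath": "gamelogs/raw"},
    },
}


def find_linked_service(dataset_definition, linked_services):
    """Return the linked service that a dataset refers to (local illustration only)."""
    wanted = dataset_definition["properties"]["linkedServiceName"]["referenceName"]
    for service in linked_services:
        if service["name"] == wanted:
            return service
    raise LookupError(f"No linked service named {wanted!r}")


resolved = find_linked_service(dataset, [linked_service])
print(resolved["name"], "->", dataset["properties"]["typeProperties"]["folderPath"])
```

Keeping the connection details in one place means several datasets can reference the same linked service without repeating them.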

Mapping data flows

Mapping data flows enable you to build and maintain data transformation graphs that execute on Spark clusters for scalable and efficient data processing. You can build a reusable library of data transformation routines and execute those processes in a scaled-out manner from your pipelines. The Spark cluster spins up and spins down when you need it. You don’t have to manage or maintain clusters.

Integration runtime

The integration runtime serves as a bridge between activities and linked services. It provides the compute environment where the activity either runs or gets dispatched from.

The integration runtime is referenced by the linked service or activity. The activity is performed in the region closest to the target data store or compute service. This placement helps the activity run as performantly as possible while meeting security and compliance needs.
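As a sketch of how a linked service can reference an integration runtime, the following Python snippet models two linked service definitions as dictionaries, one of which names a runtime in a `connectVia` property. The linked service names, the `SelfHostedRuntime` runtime name, and the `runtime_for` helper are hypothetical. The fallback name `AutoResolveIntegrationRuntime` is assumed here to stand for the default Azure runtime.

```python
# Hypothetical linked service for an on-premises database, reached through a named runtime.
on_prem_sql = {
    "name": "OnPremCustomerDatabase",
    "properties": {
        "type": "SqlServer",
        "typeProperties": {"connectionString": "<sql-server-connection-string>"},
        "connectVia": {
            "referenceName": "SelfHostedRuntime",
            "type": "IntegrationRuntimeReference",
        },
    },
}

# Hypothetical cloud linked service that doesn't name a runtime explicitly.
cloud_storage = {
    "name": "GameLogsStorage",
    "properties": {
        "type": "AzureBlobStorage",
        "typeProperties": {"connectionString": "<storage-connection-string>"},
    },
}


def runtime_for(linked_service, default="AutoResolveIntegrationRuntime"):
    """Return the referenced runtime name, or the assumed default when none is set."""
    connect_via = linked_service["properties"].get("connectVia")
    return connect_via["referenceName"] if connect_via else default


for service in (on_prem_sql, cloud_storage):
    print(f"{service['name']} runs via {runtime_for(service)}")
```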

Triggers

Triggers determine when to start a pipeline. There are different types of triggers, such as time-based triggers or event-based triggers. For more information, see Pipeline execution and triggers in Azure Data Factory or Azure Synapse Analytics.
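The two trigger styles mentioned above, time-based and event-based, might look like the following when written as Python dictionaries in the general shape of Data Factory trigger JSON. The trigger names, pipeline name, start time, blob path, and the `pipelines_started_by` helper are hypothetical, and the storage account scope is left as a placeholder.

```python
# Shared reference to a hypothetical pipeline, with the arguments the trigger passes to it.
pipeline_binding = {
    "pipelineReference": {"referenceName": "ProcessGameLogs", "type": "PipelineReference"},
    "parameters": {"inputFolder": "gamelogs/raw"},
}

# Time-based trigger: starts the pipeline once a day.
daily_trigger = {
    "name": "DailyLogProcessing",
    "properties": {
        "type": "ScheduleTrigger",
        "typeProperties": {
            "recurrence": {
                "frequency": "Day",
                "interval": 1,
                "startTime": "2024-01-01T00:00:00Z",
                "timeZone": "UTC",
            }
        },
        "pipelines": [pipeline_binding],
    },
}

# Event-based trigger: starts the pipeline when a blob is created under a path.
blob_trigger = {
    "name": "OnLogFileArrival",
    "properties": {
        "type": "BlobEventsTrigger",
        "typeProperties": {
            "blobPathBeginsWith": "/gamelogs/blobs/raw/",
            "events": ["Microsoft.Storage.BlobCreated"],
            "scope": "<storage-account-resource-id>",
        },
        "pipelines": [pipeline_binding],
    },
}


def pipelines_started_by(trigger):
    """List the names of the pipelines that a trigger definition references."""
    return [p["pipelineReference"]["referenceName"] for p in trigger["properties"]["pipelines"]]


for trigger in (daily_trigger, blob_trigger):
    print(trigger["name"], "->", pipelines_started_by(trigger))
```

Both triggers point at the same pipeline, which matches the gaming scenario of running one workflow daily and also when new files arrive.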

Control flows

Control flow is the orchestration of pipeline activities. It includes chaining activities in a sequence, branching, defining parameters at the pipeline level, and passing arguments while invoking the pipeline on demand or from a trigger. It also includes custom-state passing and looping containers. With control flow, you can create complex data processing workflows.
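Chaining activities in a sequence can be illustrated with a small local model. In the Python sketch below, each activity lists the activities it depends on in a `dependsOn` entry, and a topological sort derives one valid execution order. The activity names and the `execution_order` helper are hypothetical, and the sort is only a stand-in for the idea of dependency-driven ordering, not a description of how the service schedules work internally.

```python
from graphlib import TopologicalSorter

# Hypothetical activities, deliberately listed out of order to show that
# the order comes from the dependencies rather than from list position.
activities = [
    {
        "name": "LoadWarehouse",
        "type": "Copy",
        "dependsOn": [
            {"activity": "PartitionLogsWithHive", "dependencyConditions": ["Succeeded"]}
        ],
    },
    {"name": "CopyGameLogs", "type": "Copy", "dependsOn": []},
    {
        "name": "PartitionLogsWithHive",
        "type": "HDInsightHive",
        "dependsOn": [
            {"activity": "CopyGameLogs", "dependencyConditions": ["Succeeded"]}
        ],
    },
]


def execution_order(activity_list):
    """Return activity names ordered so that every dependency comes first."""
    graph = {
        activity["name"]: {dep["activity"] for dep in activity.get("dependsOn", [])}
        for activity in activity_list
    }
    return list(TopologicalSorter(graph).static_order())


order = execution_order(activities)
print(order)
```

This sketch ignores the dependency conditions themselves; branching on outcomes such as success or failure would need extra logic on top of the ordering.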

Next steps

Here are important next step documents to explore:

- Dataset and linked services

- Pipelines and activities

- Integration runtime

- Mapping Data Flows

- Data Factory UI in the Azure portal

- Copy Data tool in the Azure portal

- PowerShell

- .NET

- Python

- REST

- Azure Resource Manager template